On Scale Webinar Recordings

November 17th, 2011

Recently I gave two webinars on how we handle scale at AppNexus.

Recordings of these webinars are now available online! Enjoy: Part I and Part II.

I’d love to hear feedback. What do people agree or disagree with? What didn’t I cover that you’re curious about?

On Scale Webinar!

October 1st, 2011

I gave a tech talk on scalability a few months back and, due to popular demand, will be doing it as a two-part webinar this coming Thursday the 6th and Wednesday the 12th the week after! Details below…

AppNexus CTO Mike Nolet on Scale – A Two-Part Webinar Series

AppNexus co-founder and CTO Mike Nolet will be presenting a two-part series on scalability and ad serving. He’ll lead interactive discussions on how AppNexus built a global infrastructure to manage 14+ billion ads/day, with a deep dive into how AppNexus handles this volume, collects the data, aggregates it and then distributes both log and real-time data back to our customers.

In part one, Ad Serving at Scale, Mike will discuss:

– Designing the core infrastructure for AppNexus’ global cloud platform.

– Load balancing (global/local) at 300,000 QPS.

– AppNexus’ tech stack and how we manage this scale.

– NoSQL / key-value stores and building a global cookie store with over 1.5 billion keys and 150,000 write requests/second.

In part two, Data Aggregation at Scale, Mike will cover:

– Bringing 10TB of data a day back to a single location, 24/7/365.

– Aggregating 10TB of data a day to generate a simple single report for our clients.

– Leveraging technologies like Netezza, Hadoop, Vertica and RabbitMQ to provide top-notch data products to our customers.

To register for Part 1: Ad Serving at Scale, on Thursday, October 6th from 11am-12:30pm EST, click here: https://www3.gotomeeting.com/register/983170710

To register for Part 2: Data Aggregation at Scale, on Wednesday, October 12th from 11am-12:30pm EST, click here: https://www3.gotomeeting.com/register/360458342

Mikeonads entering the 2010s… adding Disqus & Twitter

July 17th, 2011

It’s actually sort of sad that I’m a CTO, yet the WordPress install on my blog has basically gone untouched since 2006.

Based on some feedback, I’ve now incorporated Twitter (#mikeonads) into the blog and migrated all my comments over to Disqus.

I’m pretty clueless when it comes to social media, so please send some feedback on how best to incorporate Twitter into the blog for better discussions!

Tech Talk on Adserving & Scalability Thursday May 18th

May 17th, 2011

This Thursday at 7PM I’ll be presenting a “Tech Talk” here in NYC on scalability & adserving. Specifically I’ll be talking about how we built the AppNexus global infrastructure to manage 10+ billion ads/day with a deep dive into how we collect, aggregate and then distribute both log and real-time data. This is definitely for a technical audience so stay away if bits and bytes aren’t your thing.

Details can be found here. Thanks to AOL Ventures for hosting and kudos to Charlie O’Donnell from First Round Capital for organizing the event!

Right Media’s Predict

March 23rd, 2011

Over the past few years, the one recurring question I’ve gotten from a number of people is — “Hey, how does Right Media’s predict work?” For obvious reasons I was never really able to answer the question.

Well, the patent we filed back in August of 2007 has finally been issued, and the information is now public. You can find the full patent here. It’s in lawyer-speak, so it’s a bit hard to read, but the diagrams show some interesting things. This is four-year-old code & modeling that has certainly been updated since, so I’m not sure how useful it is, but if you had asked me in late 2007/early 2008 how this all worked, now you know! And if you want to rebuild it, you’re out of luck: it’s patented!

The diagrams are particularly useful for understanding how it all worked. I won’t pretend to be able to explain this well after being completely disconnected from it for four years, but I pulled a few excerpts that are interesting to read for those that lack the patience to interpret lawyer speak.

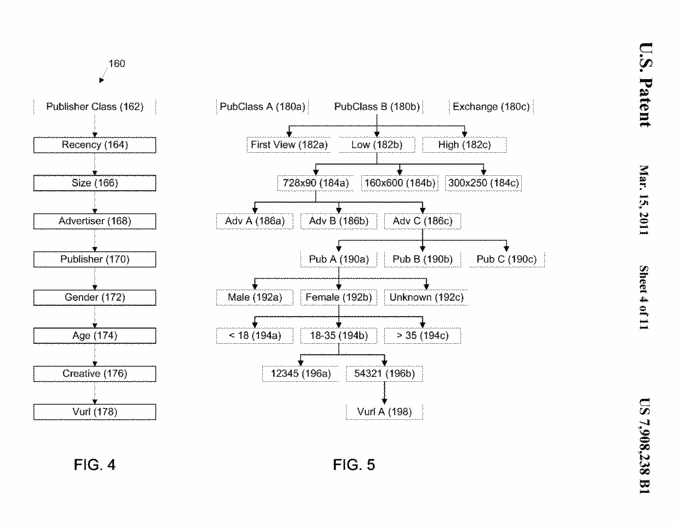

The “tree” model:

Where probability represents the probability of the user taking the respective action for the node, success represents the number of times that the advertisement has been successful in generating the desired response (e.g., a click, a conversion), tries represents the number of times an advertisement has been posted, and probability_parent represents the probability for the parent node on the tree structure (e.g., the node directly above the node for which the probability is being calculated). As summarized in table 1 below, what is meant by tries and successes varies dependent on whether the probability being calculated is a click probability, a post-view conversion probability or a post-click conversion probability.

[...]

When a node has a low number of tries, then the probability of the parent node has a greater influence over the calculated probability for the node than when the node has a large number of tries. In the extreme case, when a particular node has zero tries and zero successes, then the probability for the node equals the probability of the parent node. At the other extreme, when the node has a very large number of tries, the probability of the parent node has a negligible impact on the probability calculated for the node. As such, when the node has a large number of tries, the probability is effectively the number of successes divided by the number of tries. By factoring in the parent node probability in the calculation of a node’s probability, a probability value may be obtained even if the granularity and/or size of the available data set on its own precludes the generation of a statistically accurate probability.
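The excerpt describes how the formula behaves, but the formula itself isn’t reproduced here. One standard formula with exactly those limiting properties is additive smoothing toward the parent: p = (successes + k · p_parent) / (tries + k), where k acts as a number of “virtual tries” borrowed from the parent. The sketch below assumes that form; `PRIOR_WEIGHT`, `ROOT_PRIOR` and the `Node` type are hypothetical illustrations, not the patented formula.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical smoothing constant: how many "virtual tries" the parent's
# probability is worth. Not taken from the patent.
PRIOR_WEIGHT = 1000.0

# Hypothetical probability assumed for the (parentless) root of the tree.
ROOT_PRIOR = 0.001


@dataclass
class Node:
    name: str
    successes: int = 0  # e.g. clicks or conversions
    tries: int = 0      # e.g. impressions served
    parent: Optional["Node"] = None


def probability(node: Node) -> float:
    """Blend a node's own success rate with its parent's probability.

    With zero tries this returns the parent's probability (up to float
    rounding); as tries grow, it converges to successes / tries.
    """
    parent_p = ROOT_PRIOR if node.parent is None else probability(node.parent)
    return (node.successes + PRIOR_WEIGHT * parent_p) / (node.tries + PRIOR_WEIGHT)


advertiser = Node("advertiser", successes=500, tries=1_000_000)
new_creative = Node("new creative", parent=advertiser)
busy_creative = Node("busy creative", successes=20_000, tries=10_000_000, parent=advertiser)
```

A brand-new creative simply inherits the advertiser’s estimate, while the busy creative’s estimate sits very close to its raw 0.2% rate. A smaller `PRIOR_WEIGHT` would let a node’s own data dominate sooner.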

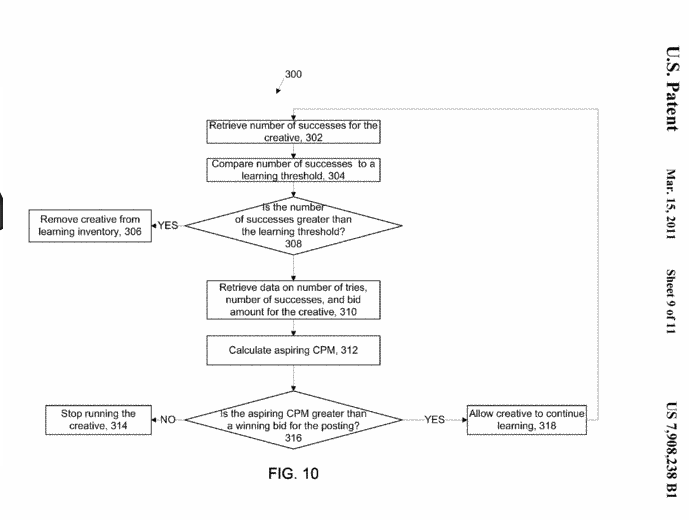

Learning Thresholds / “Aspiring CPMs”

FIG. 10 shows a process 300 for determining whether to continue learning for a particular creative based on the upper and lower limits. The transaction management system 100 retrieves a number of successes for a creative (302) and compares the number of successes to a threshold that indicates an upper limit on the amount of learning for a particular creative (304). If the number of successes is greater than the threshold, the transaction management system 100 removes the creative from the learning inventory (306). Once the creative is removed from the learning inventory, the number of tries and successes generated during the learning period are used to determine a probability that a user will act on the creative (e.g., as described above). This probability is subsequently used to generate bids for the creative in response to a publisher posting an ad request.
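Process 300 can be sketched as a periodic sweep over the learning inventory. This is a minimal illustration under stated assumptions: the `Creative` type, the single `threshold`, and the eCPM-style bid (probability × payout per action × 1,000) are hypothetical. The excerpt only says the learned probability is “used to generate bids,” and it doesn’t describe how the lower limit is applied.

```python
from dataclasses import dataclass


@dataclass
class Creative:
    creative_id: str
    successes: int
    tries: int
    payout_per_action: float  # hypothetical: what the advertiser pays per click/conversion


def graduate_creatives(learning: dict[str, Creative], threshold: int) -> list[Creative]:
    """Remove creatives whose successes exceed the learning threshold.

    Mirrors steps 302-306: read each creative's successes, compare them to
    the upper limit, and pull the creative out of the learning inventory.
    """
    graduated = []
    for creative_id, creative in list(learning.items()):
        if creative.successes > threshold:  # step 304
            del learning[creative_id]       # step 306
            graduated.append(creative)
    return graduated


def optimized_bid_cpm(creative: Creative) -> float:
    """Hypothetical bid: expected revenue per 1,000 impressions."""
    p_action = creative.successes / creative.tries  # probability learned during the learning period
    return p_action * creative.payout_per_action * 1000


learning = {
    "c1": Creative("c1", successes=12, tries=40_000, payout_per_action=2.0),
    "c2": Creative("c2", successes=3, tries=9_000, payout_per_action=5.0),
}
done = graduate_creatives(learning, threshold=10)
```

Here only `c1` (12 successes) crosses a threshold of 10. It leaves the learning pool and would bid 12/40,000 × $2.00 × 1,000 = $0.60 CPM, while `c2` keeps learning.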

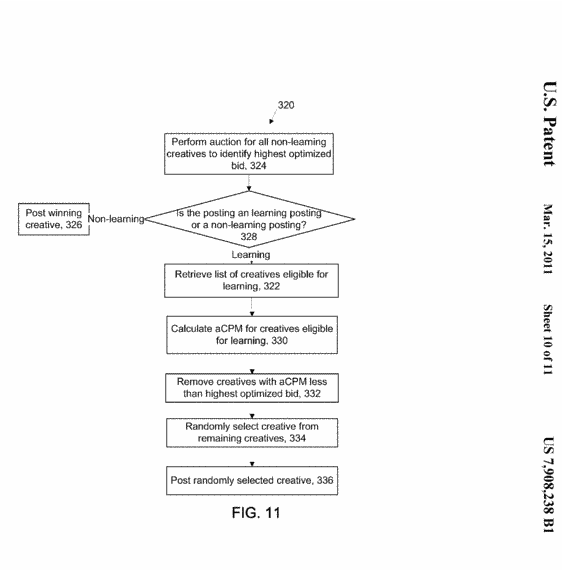

Deciding on a learn vs. optimized creative:

In order for learning to occur, the transaction management system 100 devotes a percentage of the posted advertisements to learning creatives. The allocated inventory for learning is used to allow creatives to receive sufficient impressions to generate information on the probability of a user taking action when the advertisement is posted. FIG. 11 shows an auction process 320 for selecting an ad creative to be served in response to an ad call received by the ad exchange. The transaction management system 100 performs an auction among the non-learning creatives on the ad exchange to identify the highest optimized bid (324). Since a limited amount of inventory is devoted to learning, the system determines whether the ad call is allocated to learning or is for non-learning (328). If the ad call is for non-learning, then the winning non-learning creative is posted in response to the ad call (326). If the ad call is allocated for learning, then the transaction management system 100 retrieves a list of creatives eligible for learning (322). The list of creatives eligible for learning can be determined as described above. For the learning creatives, the transaction management system 100 calculates the aCPM for the creatives included in the list of creatives eligible for learning (330). Based on the calculated aCPM, the system 100 removes any creatives for which the calculated aCPM is lower than the highest optimized bid (332). The transaction management system 100 randomly selects one of the remaining learning creatives (334) and posts the randomly selected creative in response to the ad call (336).
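The auction in FIG. 11 can be sketched roughly as follows. Assumptions, all hypothetical: learning traffic is allocated by a random draw against a fixed `learning_share`, each candidate already carries its bid (optimized bid or aCPM) as a single number, and when no learning creative survives the aCPM filter, the optimized winner is served. The excerpt doesn’t say how that last case is handled.

```python
import random
from dataclasses import dataclass


@dataclass
class Candidate:
    creative_id: str
    cpm: float  # optimized bid for regular creatives, aCPM for learning ones


def select_creative(optimized: list[Candidate],
                    learning: list[Candidate],
                    learning_share: float,
                    rng: random.Random) -> Candidate:
    """Pick the creative to serve for one ad call (assumes `optimized` is non-empty)."""
    # Step 324: auction among the non-learning creatives.
    winner = max(optimized, key=lambda c: c.cpm)

    # Step 328: is this ad call allocated to learning?
    if rng.random() >= learning_share:
        return winner  # Step 326: serve the optimized winner.

    # Steps 322, 330, 332: keep learning creatives whose aCPM is not
    # lower than the highest optimized bid.
    eligible = [c for c in learning if c.cpm >= winner.cpm]
    if not eligible:
        return winner  # Assumption: fall back to the optimized winner.

    # Steps 334, 336: serve one of the remaining learning creatives at random.
    return rng.choice(eligible)


rng = random.Random(42)
regular = [Candidate("r1", 1.20), Candidate("r2", 0.90)]
learners = [Candidate("l1", 0.50), Candidate("l2", 1.50), Candidate("l3", 2.00)]
served = select_creative(regular, learners, learning_share=0.05, rng=rng)
```

Filtering on aCPM before the random pick means that, to the extent aCPM reflects what a learning creative is worth, learning only takes impressions where it at least matches the optimized winner. Passing in an explicit `random.Random` keeps the selection reproducible in tests.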

Gotta love quality ads…

October 28th, 2010

Saw this ad floating around today and I just have to share… “Who Has Better Hair? Justin Bieber or the bear”.

I mean… it even rhymes. Quality quality creative work!

Comments are back on…

September 27th, 2010

I swear I’m technical and a CTO… but for some reason I can’t seem to operate WordPress. Comments on my new post had been disabled since yesterday; they’re now back on, so if you had something to say, please come back.

Also, I’ve had a few requests for a Twitter feed… I’ll try to set that up soon! So hard to keep up with all these Web 2.0 technologies =).

-Mike

The Challenge of Scaling an Adserver

April 4th, 2010

So much of our time these days is spent talking about all the new features & capabilities that people are building into their adserving platforms. One component often neglected in these conversations is scalability.

A hypothetical ad startup

Here’s a pretty typical story. A smart group of folks come up with a good idea for an advertising company. The company incorporates, raises some money, and hires some engineers to build an adserver. Given that there are only so many people in the world who have built scalable serving systems, the engineering team building said adserver is generally doing this for the first time.

The engineering team starts building the adserver and is truly baffled as to why the major guys like DoubleClick and Atlas haven’t built features like dynamic string matching in URLs or boolean segment targeting (e.g., (A+B) OR (C+D)). Man, these features are only a dozen lines of code or so, let’s throw them in! This adserver is going to be pimp!

It’s not just the adserver that is going to be awesome. Why should it ever take anyone four hours to generate a report? That’s so old school. Let’s just do instant loads & reporting that’s never more than 5 minutes out of date! No longer will people have to wait hours to see how their changes impacted performance and click-through rates.

The CEO isn’t stupid, of course, and asks:

“Is this system going to scale guys?”

“Of course”

Responds the engineering manager. We’re using this new thing called “cloud computing” and we can spool up equipment near instantly whenever we need it, don’t worry about it!

And so said startup launches with their new product. Campaign updates are near instant. Reporting is massively detailed and almost always up to date. Ads are matched dynamically according to 12 parameters. The first clients sign up and everything is humming along nicely at a few million impressions a day. Business is sweet.

Then the CEO signs a big new deal… a top 50 publisher wants to adopt the platform and is going live next week! No problem, let’s turn on a few more adservers in our computing cloud! Everything should be great… and then…

KABLOOOOOOOOEY

The new publisher launches and everything grinds to a halt. First, adserving latency skyrockets. Turns out all those fancy features work great when running 10 ads/second, but at 1,000/s, not so much. Emergency patches are pushed out that rip out half the functionality just to keep things running. Yet there are still weird latency spikes that nobody can explain.

Next, all the databases start to crash under the load of the added adservers and increased volume. Front-end boxes no longer receive campaign updates because the database is down, and all of a sudden nothing in production seems to work. Reports are now massively behind… and nobody can tell the CEO how much money was spent or lost over the past 24 hours!

Oh crap… what to tell clients…

Yikes — Why?

I would guess that 99% of the engineers who have worked at an ad technology company can commiserate with some or all of the above. The thing is, writing software that does something once is easy. Writing software that does the same thing a trillion times a day, not so much. Trillions, you ask? We don’t serve trillions of ads! Sure, but don’t forget that any given adserver will soon be evaluating *thousands* of campaigns. This means that for a billion impressions you are actually running through the same dozen lines of code trillions of times.

Take, for example, boolean segment targeting: the idea of having complex targeting logic, e.g., “this user is in both segments A and B OR this user is in segments C and D”. From a computing perspective this is quite a bit more complicated than a simple “Is this user in segment A?” I don’t have exact numbers on me, but imagine that, when written by your average engineer, the boolean code takes about .02ms longer to compute per campaign on a single ad impression. So what, you say, .02ms is nothing!
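For readers who haven’t implemented it, here is one common way to represent and evaluate boolean segment targeting: store the expression as an OR of AND-clauses and test each clause against the user’s segments. This is a hypothetical sketch, not AppNexus code; the segment IDs and the precompiled-bitmask variant are illustrative assumptions.

```python
# Targeting expression in disjunctive normal form: an OR of AND-clauses.
# Segment IDs here are hypothetical.
A, B, C, D = 1, 2, 3, 4
targeting = [frozenset({A, B}), frozenset({C, D})]  # (A AND B) OR (C AND D)


def matches(user_segments: set[int], dnf: list[frozenset[int]]) -> bool:
    """True if the user belongs to every segment of at least one clause."""
    return any(clause <= user_segments for clause in dnf)


def compile_masks(dnf: list[frozenset[int]]) -> list[int]:
    """Precompile each clause into a bitmask once, at campaign-load time."""
    masks = []
    for clause in dnf:
        mask = 0
        for seg in clause:
            mask |= 1 << seg
        masks.append(mask)
    return masks


def matches_masks(user_mask: int, masks: list[int]) -> bool:
    """Per-impression check: one AND and one compare per clause."""
    return any((user_mask & m) == m for m in masks)
```

A user in segments {A, B, 9} matches via the first clause; a user in {A, C} matches neither. Whichever representation is used, the cost per campaign is small; what hurts is multiplying it by every campaign on every impression.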

In fact, most engineers wouldn’t even notice the impact. With only 50 campaigns, the total impact of the change is a 1ms increase in processing time: not noticeable. But what happens when you go from 50 campaigns to 5,000? We now spend 100ms per ad call evaluating segment targeting, enough to start getting complaints from clients about slow adserving. Not to mention that each CPU core can now only process 10 ads/second versus the 1,000/s it used to handle. Serving 1 billion ads in a day averages out to roughly 11,600 ads/second, and traffic peaks well above that average, so I now need about 3,000 CPU cores at peak time, or about 750 servers. Even at cheap Amazon AWS prices, that’s still about $7k in hosting costs per day.
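The back-of-the-envelope math above can be reproduced in a few lines. The 0.02 ms per campaign, 5,000 campaigns, 1 billion ads/day, 3,000 cores, 750 servers and $7k/day figures come from the post; the peak-to-average ratio, cores per server and hourly price computed at the end are only what those figures imply, not numbers the post states.

```python
SECONDS_PER_DAY = 86_400
EXTRA_MS_PER_CAMPAIGN = 0.02  # from the post: extra boolean-targeting cost per campaign


def targeting_ms_per_ad(campaigns: int) -> float:
    """Extra processing time per ad call when every campaign is evaluated."""
    return campaigns * EXTRA_MS_PER_CAMPAIGN


def ads_per_core_per_second(ms_per_ad: float) -> float:
    """How many ad calls one core can process if each takes `ms_per_ad`."""
    return 1000.0 / ms_per_ad


ads_per_day = 1_000_000_000
campaigns = 5000
evaluations_per_day = ads_per_day * campaigns                       # 5 trillion campaign checks
avg_ads_per_second = ads_per_day / SECONDS_PER_DAY                  # ~11,574
per_core = ads_per_core_per_second(targeting_ms_per_ad(campaigns))  # 10 ads/s
avg_cores = avg_ads_per_second / per_core                           # ~1,157

# Figures quoted in the post, and what they imply.
peak_cores, servers, dollars_per_day = 3000, 750, 7000
implied_peak_to_avg = peak_cores / avg_cores                        # ~2.6x
implied_cores_per_server = peak_cores / servers                     # 4
implied_dollars_per_server_hour = dollars_per_day / servers / 24    # ~$0.39

print(f"{evaluations_per_day:,} targeting evaluations per day")
print(f"{avg_cores:,.0f} cores on average, {peak_cores:,} at peak "
      f"({implied_peak_to_avg:.1f}x), {implied_cores_per_server:.0f} cores/server, "
      f"${implied_dollars_per_server_hour:.2f}/server-hour")
```

The useful takeaway is the chain of multiplications: a per-campaign cost times campaigns per ad times ads per day, which is how a .02ms slowdown becomes hundreds of servers.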

Optimizing individual lines of code isn’t the only thing that matters though. How systems interact, how log data is shipped back and forth and aggregated, how updates are pushed to front-end servers, how systems communicate, how systems are monitored … every mundane detail of ad-serving architecture gets strained at internet scale.

Separating the men from the boys…

What’s interesting about today’s market is that very few of the new ad technologies entering it have truly been tested at scale. If RTB volumes grow throughout this year as I expect they will, we’ll see a lot of companies struggling to keep up by Q4. Some will outright fail. Some will simply stop innovating; only a few will manage to keep both scaling and innovating at the same time.

Don’t believe me? Look at every incumbent adserving technology: DoubleClick, Atlas, Right Media, MediaPlex, OAS [etc.]. Of all of the above, only Google has managed to release a significant improvement, with the updated release of DFP. Each of these systems is stuck in architecture hell: the original designs have been patched and modified so many times over that it’s practically impossible to add significant new functionality. In fact, the only way Google managed to release an updated DFP in the first place was by completely rebuilding the entire code base from scratch on the Google frameworks, and that took over two years of development.

I’ll write a bit more on scalability techniques in a future post!

New York Times article on RTB

March 11th, 2010

Sorry for a bit of self-promotion, but Stephanie Clifford from the New York Times just published a great story on real-time bidding and how AppNexus & eBay have worked together over the past year to dramatically increase ROI with real-time buying from companies like Google:

Instant Ads Set the Pace on the Web.

Scary. I’ve been trying to explain to my mother what I do for years, but I think she’ll finally get it after reading this.

Ugh, commenting has been broken

March 4th, 2010

Apologies to everyone who posted comments on the blog over the past 2 weeks: it appears that my comment spam filter marked every submitted comment as spam! I went through a good chunk just now but have about 1,500 spam comments to weed through, so there are probably a few I’ve missed.

I still have to figure out why the spam filter is broken, but please be aware that comments might take a few hours to show up; they aren’t going into a black hole!

-Mike

PS: Normally comments are auto-approved unless they contain too many links …